By Rajiv Pant and Devesh Raj (Originally published: February 10, 2025 – Updated: February 16, 2025)

ChatGPT’s arrival in late 2022 marked a turning point in how we interact with artificial intelligence. Business leaders and technologists now face a pressing challenge: explaining these systems to stakeholders who need to understand their capabilities and limitations. During a recent conversation, we explored a way of thinking about large language models that builds on familiar concepts to illuminate their inner workings.

The Restaurant Game: An Analogy for AI

Picture a restaurant during a busy evening service. Throughout the space, different groups are playing various word games that have entertained people for generations. At one table, friends engage in simple word association – when someone says “blue,” the next person might say “sky,” following whatever connection comes to mind. At another table, professors play a creative writing game invented by surrealist artists, where each person writes a line of a story without seeing what came before, creating unexpectedly delightful or bizarre narratives. And at a third table, improvisational actors build stories together, each person adding new elements while maintaining the coherence of the overall narrative.

Now imagine these different approaches to collaborative language generation merging into something new. The entire restaurant becomes engaged in building sentences together, but with a crucial difference from traditional word games: Instead of free association or hidden context, each participant draws upon everything they’ve read and learned to predict the most probable next word.

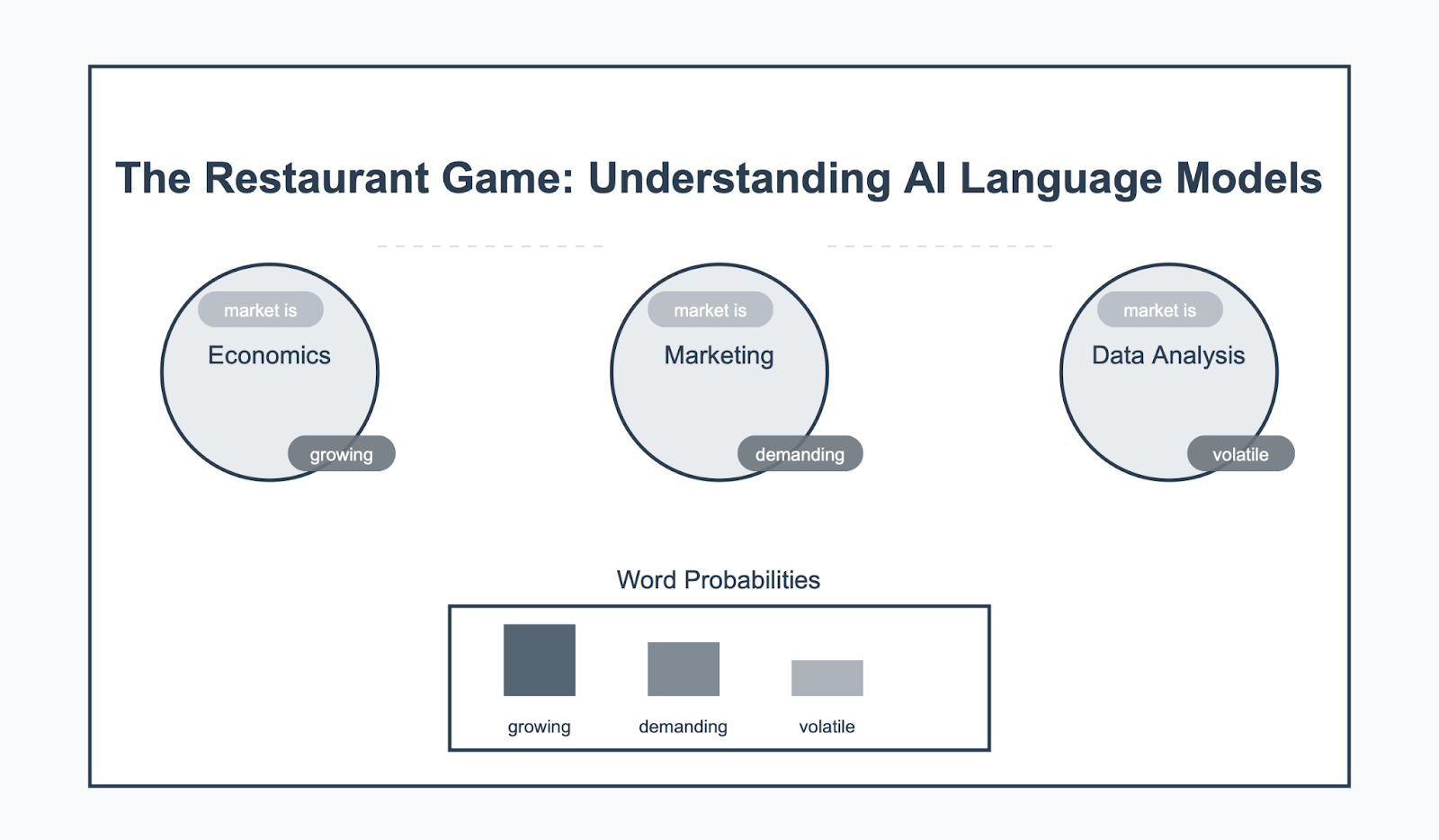

When someone begins with, “The market is…”, an economist recalls patterns from past reports and trends. “Growing” might feel like the safest choice, supported by years of analysis and historical data. But they might also consider “volatile” or “declining”, influenced by recent events or their interpretation of the current economic climate. Each potential word carries a probability, shaped by the economist’s unique knowledge and experience.

Meanwhile, a marketing professional at the next table might interpret the same phrase differently. Their perspective is shaped by campaign data, customer insights, and evolving consumer trends. For them, “demanding” or “competitive” might come to mind, reflecting their focus on customer behavior and market positioning. Each choice reflects not only the marketer’s expertise but also the distinct probabilities derived from their accumulated understanding.

This collaborative prediction game mirrors how language models process and generate text. When GPT-4 or Claude encounters “The market is”, it too calculates probabilities based on its training data – much like our restaurant patrons draw upon their reading history. The key difference? The AI systems have learned from billions of examples, and they compute an explicit probability for every possible next word.
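For readers who want to see the arithmetic, here is a minimal sketch of the prediction step. It assumes a model has already assigned a raw score to each candidate word; the words and scores below are invented for illustration and do not come from any real model. The softmax function shown is the standard way to turn such scores into probabilities.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - peak) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

# Hypothetical scores for words that could follow "The market is".
# These numbers are made up; a real model scores tens of thousands of tokens.
candidate_scores = {
    "growing": 2.0,
    "volatile": 1.5,
    "competitive": 1.0,
    "declining": 0.5,
}

next_word_probs = softmax(candidate_scores)
most_likely = max(next_word_probs, key=next_word_probs.get)

# Print candidates from most to least probable.
for word, p in sorted(next_word_probs.items(), key=lambda kv: -kv[1]):
    print(f"{word:12s} {p:.2f}")
```

Every candidate keeps some probability, which is why the economist's "growing" and the marketer's "competitive" can both be plausible continuations of the same phrase.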

Finding the Right Expert: The Restaurant Host’s Role

Recent developments in AI, like DeepSeek’s mixture-of-experts approach, add another layer to our restaurant analogy. Imagine a skilled restaurant host who, upon hearing a question, doesn’t ask every patron but instead directs it to the most relevant table of experts. When someone asks about market trends, the host immediately turns to the economists’ table. For a question about brand positioning, they consult the marketing professionals.

This targeted approach makes the process more efficient and often produces better results than asking everyone in the restaurant. It’s similar to how newer AI models identify and activate the most relevant “expert” components within their architecture for each specific task, rather than engaging their entire neural network for every question.
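The host's job can be sketched in a few lines. This is a toy illustration under loud assumptions, not a description of DeepSeek's or any other real architecture: the expert names, the stand-in expert functions, and the gate scores are all hypothetical, and in real models the routing is learned and happens inside the network rather than per question.

```python
import math

def softmax(values):
    """Normalize a list of scores into weights that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical "tables" of experts; each is a stand-in function on a number.
EXPERTS = {
    "economists": lambda x: 2.0 * x,
    "marketers": lambda x: x + 3.0,
    "chefs": lambda x: -x,
}

def route(gate_scores, top_k=2):
    """Pick the top_k highest-scoring experts and renormalize their weights."""
    ranked = sorted(gate_scores, key=gate_scores.get, reverse=True)[:top_k]
    weights = softmax([gate_scores[name] for name in ranked])
    return dict(zip(ranked, weights))

def mixture_output(x, gate_scores, top_k=2):
    """Weighted sum of the chosen experts' outputs; unchosen experts never run."""
    chosen = route(gate_scores, top_k)
    return sum(w * EXPERTS[name](x) for name, w in chosen.items())

# Invented gate scores: the host thinks this input suits the economists best.
gate_scores = {"economists": 3.0, "marketers": 1.0, "chefs": -2.0}
chosen = route(gate_scores)
result = mixture_output(1.0, gate_scores)
print(chosen, result)
```

The efficiency gain comes from the last step: only the selected experts do any work, so most of the restaurant stays quiet for any given input.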

How Language Models Predict Words

The game becomes more intriguing when we consider context. Just as our restaurant patrons don’t simply react to the last word spoken, language models maintain awareness of the entire conversation. A sentence beginning “The dog was” creates expectations that narrow the field of likely next words. “Barking” or “sleeping” become high-probability choices, while “photosynthesizing” drops to near-zero probability – unless earlier context has established that we’re discussing science fiction or surrealist literature.
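A simple counting exercise shows how extra context shifts the odds. The sketch below is a deliberately crude stand-in: it assumes a tiny, made-up corpus and estimates probabilities by counting what follows a given phrase. Modern language models do not work by literal counting, but the effect of longer context on the probabilities is analogous.

```python
from collections import Counter

# Tiny invented corpus; real models learn from vastly more text.
CORPUS = (
    ["the dog was barking"] * 5
    + ["the dog was sleeping"] * 3
    + ["in the story the alien dog was photosynthesizing"]
)

def next_word_probs(context, corpus=CORPUS):
    """Estimate P(next word | context) by counting what follows the context."""
    ctx = context.split()
    counts = Counter()
    for sentence in corpus:
        words = sentence.split()
        # Slide a window over the sentence and record the word after each match.
        for i in range(len(words) - len(ctx)):
            if words[i:i + len(ctx)] == ctx:
                counts[words[i + len(ctx)]] += 1
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

short_context = next_word_probs("dog was")
long_context = next_word_probs("alien dog was")
print(short_context)
print(long_context)
```

With only "dog was" to go on, "photosynthesizing" is a rare continuation; once the context includes "alien", it becomes the only continuation this toy corpus has seen.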

We’ve seen this dynamic play out in improvisational theater exercises for decades. Good improv actors maintain awareness of the entire scene while adding their contributions. They work with probability too – choosing responses that are likely enough to make sense but unexpected enough to drive the story forward. Language models perform a similar balancing act between predictability and surprise.

This balance becomes crucial in business applications. When generating standard business correspondence, we might want our AI systems to behave like conservative players in our restaurant game – always choosing the most statistically likely options. But when brainstorming new product ideas or crafting creative marketing copy, we might adjust the system to occasionally select less probable but more innovative choices.

The historical precedents for collaborative language games offer insights into how we might better use AI language models. Victorian parlor games like Consequences demonstrated how partial information and structured collaboration could create engaging narratives. Word association games revealed patterns in human semantic networks long before we built artificial ones. Group storytelling exercises showed how constraints can paradoxically enhance creativity.

Modern language models build upon these insights. They combine the pattern recognition of word association, the contextual awareness of improv theater, and the structured collaboration of parlor games – all powered by unprecedented computational resources and training data.

What It Means for Business Leaders

Consider how this understanding might influence business decisions about AI implementation. When a company trains a language model on industry-specific data, they’re essentially adding a new domain expert to our metaphorical restaurant. When they adjust the temperature settings of their AI system, they’re shifting between conservative and creative play styles in our game.
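To make the temperature idea concrete, here is a minimal sketch of how a temperature value reshapes next-word probabilities before a word is sampled. The candidate words and scores are invented, and the function names are hypothetical; commercial AI systems expose temperature as a setting rather than as code like this.

```python
import math
import random

def apply_temperature(scores, temperature):
    """Divide scores by the temperature (must be > 0), then softmax them."""
    scaled = {w: s / temperature for w, s in scores.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - peak) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def sample_word(scores, temperature, rng):
    """Draw one word at random according to the temperature-adjusted odds."""
    probs = apply_temperature(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

# Hypothetical scores for words that could follow "The market is".
scores = {"growing": 2.0, "volatile": 1.5, "competitive": 1.0, "declining": 0.5}

conservative = apply_temperature(scores, 0.2)  # low temperature: play it safe
creative = apply_temperature(scores, 2.0)      # high temperature: spread the odds

rng = random.Random(0)  # fixed seed so the sketch is repeatable
picks = [sample_word(scores, 2.0, rng) for _ in range(5)]
print(conservative["growing"], creative["growing"], picks)
```

A low temperature concentrates almost all of the probability on the top choice, which suits standard correspondence; a high temperature flattens the distribution so that less likely words are chosen more often, which suits brainstorming.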

The changes in business communication since late 2022 reflect this new understanding. Companies increasingly craft their prompts like skilled game moderators, setting up contexts and constraints that guide AI systems toward desired outcomes. They’re learning to balance between the predictability needed for standard business processes and the creativity required for innovation.

Looking ahead, this perspective suggests several developments in human-AI interaction. Just as different tables in our restaurant offer different kinds of expertise, we’re likely to see more specialized AI models trained for specific industries and tasks. The fluid way our restaurant patrons maintain context and switch between domains points toward more sophisticated AI systems that can better understand and maintain context across conversations.

When you next interact with an AI language model, think of our restaurant scene. Behind each response lies a process similar to our collaborative game – a vast calculation of probabilities, informed by extensive training data, maintaining context while balancing between predictability and innovation. This mental model helps bridge the gap between the familiar human experience of language and the mathematical reality of AI systems.

The rapid evolution of AI technology can make it seem alien and incomprehensible. But at its core, it builds upon patterns of language and collaboration that humans have explored through games and exercises for generations. Understanding these connections can help us better grasp both the capabilities and limitations of our new AI tools.

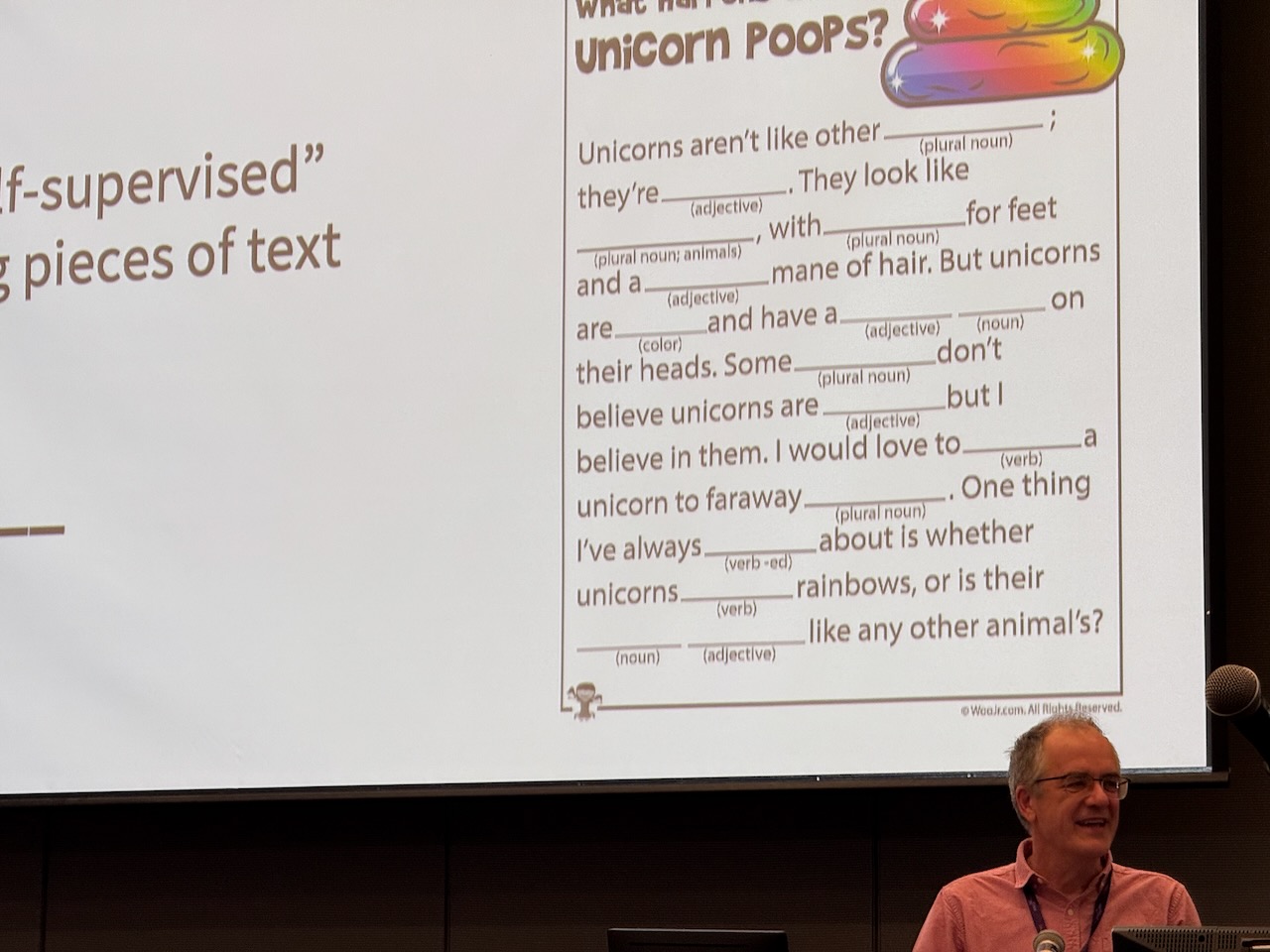

Update (February 16): AI and Mad Libs? A Parallel with Professor Christopher Manning

At Stanford HAI’s First Workshop on Public AI Assistants and World-Wide Knowledge (WWK), Professor Christopher Manning, one of the world’s foremost experts in Natural Language Processing, used an insightful example to explain how LLMs learn. His slide, structured like a Mad Libs-style fill-in-the-blank exercise, visually demonstrated how models predict missing words based on context. It was strikingly similar to my “Culinary Code” analogy, reinforcing the idea that AI learns by recognizing patterns in data, much like how chefs follow and refine recipes over time.

This parallel further strengthens our belief that using intuitive metaphors—whether drawn from food, childhood word games, or language itself—helps demystify AI for a broader audience. Seeing these ideas resonate at the event was a powerful reminder of how effective analogies can be in explaining complex systems.

Professor Christopher Manning, a leading expert in Natural Language Processing and AI, presenting at Stanford HAI’s “First Workshop on Public AI Assistants and World-Wide Knowledge (WWK)” in February 2025. His talk included an example illustrating how language models learn patterns in text, similar to how we described AI’s “Culinary Code” in this blog post.

AI Research and the First WWK Workshop at Stanford HAI

This workshop marked the first dedicated event on public AI assistants at the Stanford Institute for Human-Centered Artificial Intelligence (HAI), signaling a growing focus on democratizing AI and making it more accessible. As one of the speakers at the event, I had the opportunity to contribute to discussions on AI’s evolving role in human communication, a theme closely tied to the reflections in this post.

The event brought together researchers, industry leaders, and practitioners to explore the trajectory of AI-driven knowledge systems. Being part of this milestone conversation reaffirmed how rapidly AI is advancing—and how discussions like these help shape the future of AI accessibility, usability, and governance.

For those interested in the event, more details can be found here, and the Day 1 agenda is available here.

Closing Thoughts

Understanding AI doesn’t require a technical background—it requires the right lens. Whether we think of AI as a chef, a storyteller, or a game of Mad Libs, the key is recognizing that these models are predictive engines, trained to recognize and generate patterns based on vast linguistic data. Events like the WWK workshop at Stanford HAI help push these conversations forward, making AI more transparent and accessible to all.

We look forward to continuing these discussions—both in upcoming blog posts and at future industry events.

About the authors:

Rajiv Pant is President at Flatiron Software and Snapshot AI, where he leads organizational growth and AI innovation while serving as a trusted advisor to enterprise clients on their AI transformation journeys. With a background spanning CTO roles at The Wall Street Journal, The New York Times, and other major media organizations, he brings deep expertise in language AI technology leadership and digital transformation.

Devesh Raj is Chief Operating Officer at Sky in the UK, and leads Sky’s day-to-day operations. Previously, he was CEO of Sky in Germany, Head of Corporate Strategy at Comcast-NBCUniversal and led BCG’s TMT practice.

Rajiv and Devesh are both alumni members of the World Economic Forum’s Community of Young Global Leaders.