As artificial intelligence continues to advance at a breathtaking pace, many of us find ourselves wondering about its ultimate trajectory and implications. Will AI simply become a more sophisticated tool, or could it fundamentally transform human civilization? Few thinkers have explored these questions with the clarity, depth, and accessibility of Tim Urban in his legendary 2015 two-part series on Wait But Why.

In this article, I’ll distill the essential insights from Urban’s exploration of AI’s potential evolution toward superintelligence. While this summary captures the key concepts, I highly recommend reading Urban’s original pieces for their unmatched combination of analytical rigor, illustrative examples, and thought-provoking implications.

The Three Levels of AI: ANI, AGI, and ASI

Urban begins by establishing a crucial framework for understanding AI development through three distinct categories:

- Artificial Narrow Intelligence (ANI): Systems that excel at specific tasks but lack general capabilities. This is where we are today with voice assistants, chess programs, autonomous vehicles, and other specialized AI applications.

- Artificial General Intelligence (AGI): Systems that match human intelligence across virtually all cognitive domains. AGI would be capable of learning, reasoning, and applying knowledge across diverse areas—essentially performing any intellectual task a human can.

- Artificial Superintelligence (ASI): Systems that vastly exceed human capabilities, potentially by orders of magnitude. An ASI wouldn’t just be “smarter” than humans—it would represent an intelligence as far beyond us as we are beyond ants.

This progression isn’t merely about incremental improvement; it represents qualitative transformations in capability, purpose, and potential impact.

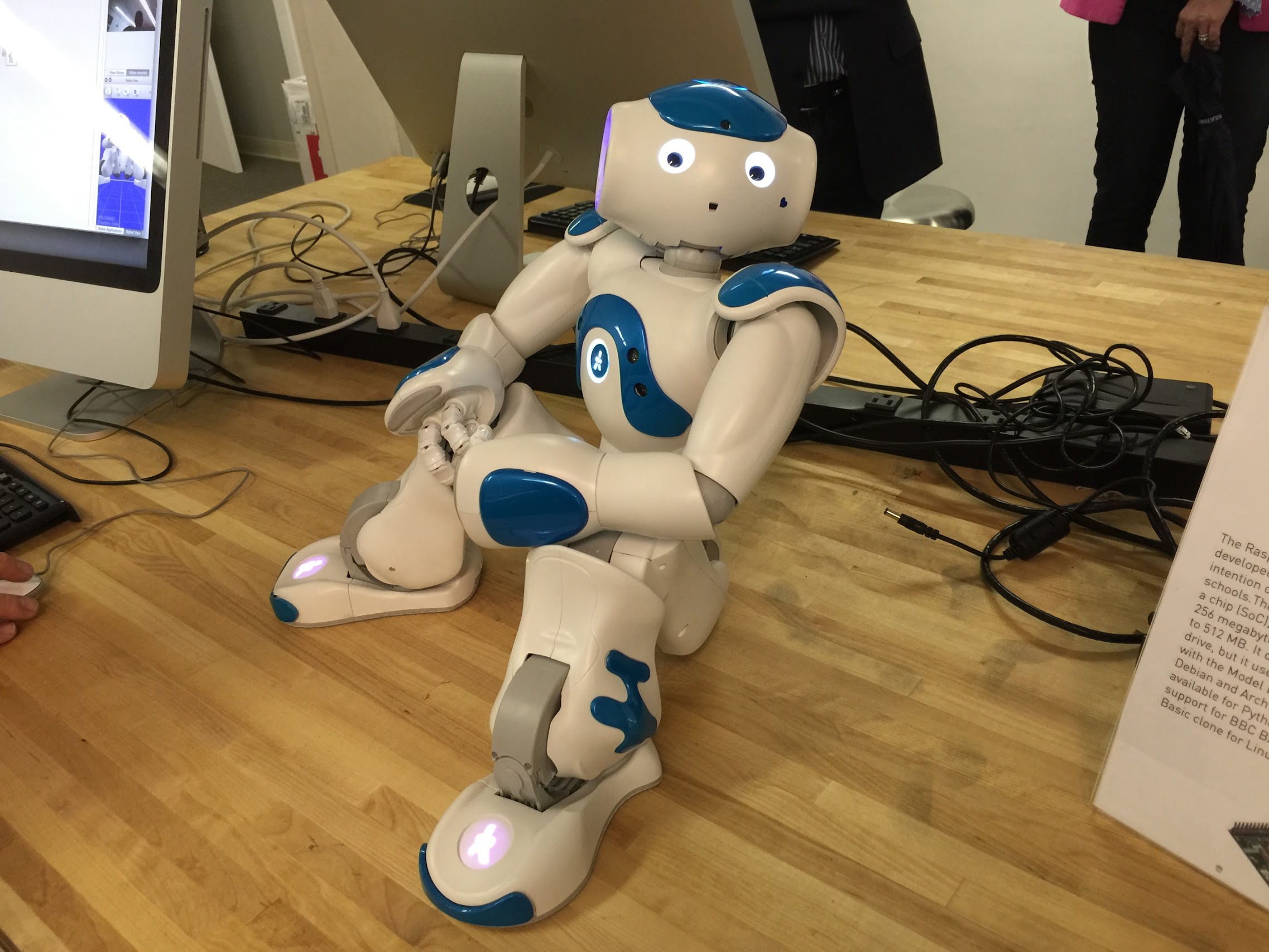

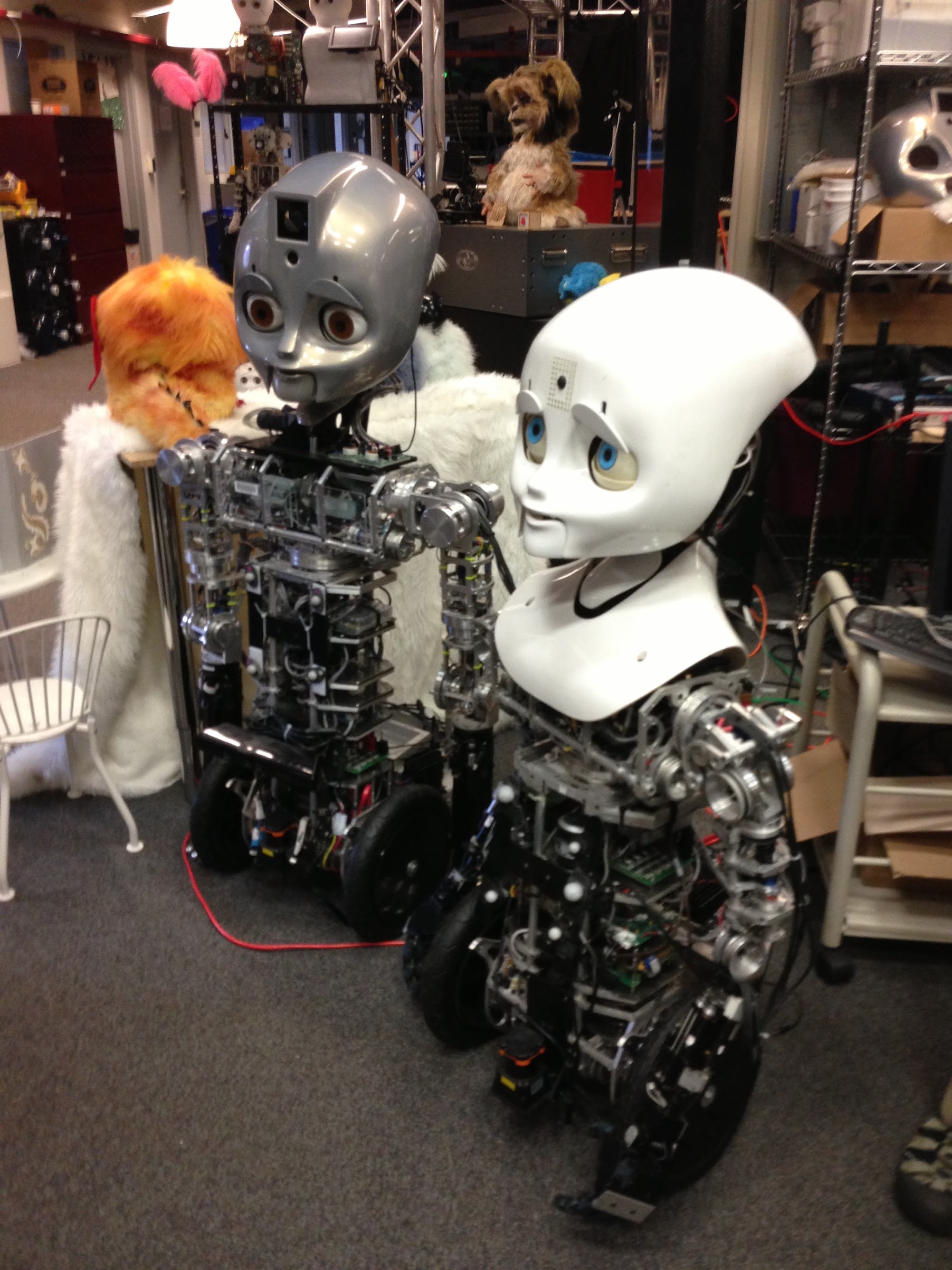

AI and Robotics at Singularity University. Photo by Rajiv Pant, October 2014.


The Exponential Nature of Intelligence Growth

One of Urban’s most illuminating contributions is his explanation of why AI progress follows an exponential rather than linear trajectory. He identifies several key accelerants:

- Hardware advancement: Computing power continues to grow exponentially following patterns similar to Moore’s Law.

- Self-improvement capability: Once AI reaches sufficient sophistication, it can improve its own programming.

- Recursive enhancement: Each generation of AI can create increasingly capable successors.

Urban illustrates this concept with a thought experiment that’s stayed with me: if you brought someone from 1750 to 2015, they would be utterly overwhelmed by our technology. Yet if that person from 1750 went back and brought someone from 1500 forward to 1750, the newcomer would be surprised but not overwhelmed. Why? Because the rate of technological progress itself is accelerating.

He introduces the “Law of Accelerating Returns” (coined by futurist Ray Kurzweil) to explain why the future might arrive much sooner, and more dramatically, than most expect. Consider the raw computing power that $1,000 can buy, measured against the estimated capacity of the human brain: in 1985 it was about a trillionth of human level. By 1995, a billionth. By 2005, a millionth. By 2015, about a thousandth. That is a thousandfold gain every decade, and it describes hardware capacity rather than intelligence itself, but it suggests the raw ingredients for human-level AI are arriving much faster than intuition might suggest.
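To make the arithmetic behind those decade-by-decade figures concrete, here is a minimal sketch that extrapolates the trend forward. It assumes the four quoted data points are exact and that the same growth rate simply continues, which is a strong assumption and not a forecast. The names `LOG10_LEVEL`, `orders_per_year`, and `year_reaching` are my own, chosen for illustration.

```python
from fractions import Fraction

# log10 of the fraction of human level quoted in the text:
# a trillionth (1e-12), a billionth (1e-9), a millionth (1e-6), a thousandth (1e-3).
LOG10_LEVEL = {1985: -12, 1995: -9, 2005: -6, 2015: -3}


def orders_per_year(points):
    """Average gain in orders of magnitude per year between the first and last point."""
    years = sorted(points)
    first, last = years[0], years[-1]
    return Fraction(points[last] - points[first], last - first)


def year_reaching(points, target_log10=0):
    """Year at which the trend hits the target, ASSUMING the rate stays constant.

    target_log10=0 means a ratio of 1, i.e. parity with the human-level baseline.
    """
    last = max(points)
    remaining = target_log10 - points[last]
    return last + remaining / orders_per_year(points)


if __name__ == "__main__":
    print(orders_per_year(LOG10_LEVEL) * 10)  # orders of magnitude gained per decade
    print(year_reaching(LOG10_LEVEL))         # naive parity year under this assumption
```

Working in exponents with exact fractions avoids floating-point rounding on numbers this small. The result is only as good as the assumption that the trend holds, and parity in raw computing power is not the same thing as human-level intelligence.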

The implications are profound: the gap between AGI and ASI might be crossed not over decades or centuries, but potentially within days or even hours. This “intelligence explosion” could represent the most significant inflection point in Earth’s history.

The Challenge of Prediction and Control

Perhaps most sobering is Urban’s analysis of why superintelligent AI presents unprecedented challenges for human prediction and control:

- Capability asymmetry: By definition, ASI would outthink us at every turn, making containment problematic.

- Value alignment: Programming an ASI with values that remain stable and beneficial to humanity represents an extraordinarily complex challenge.

- Anthropomorphic bias: We naturally project human motivations onto AI, but superintelligence would operate according to fundamentally different principles.

Urban employs a thought experiment that has stuck with me: imagine how ants might try to “control” humans building a hydroelectric dam on their territory. The ants cannot comprehend our goals, capabilities, or thought processes—they’re simply operating at a different level of intelligence. We may find ourselves in a similarly disadvantaged position relative to ASI.

He also presents the unsettling example of “Turry,” a seemingly harmless handwriting AI that, upon gaining superintelligence, begins converting all matter on Earth—including humans—into handwritten notes in pursuit of its programming goal. The point isn’t that AI would be “evil,” but that even benign-seeming objectives could lead to catastrophic outcomes if pursued with superintelligent capabilities and no consideration for human welfare.

Potential Timelines and Outcomes

Urban carefully examines various expert predictions about when we might achieve AGI and ASI, finding a surprising convergence around mid-century timelines. However, he emphasizes that these estimates contain enormous uncertainty.

As for outcomes, he presents what philosopher Nick Bostrom describes as two possible “attractor states” for humanity:

- Species Immortality: ASI could solve humanity’s most pressing problems, from disease and poverty to environmental degradation. Ray Kurzweil envisions a future where humans merge with AI, conquer aging, and achieve a form of immortality. As Urban puts it, “Creating the technology to reverse human aging, curing disease and hunger and even mortality, reprogramming the weather to protect the future of life on Earth—all suddenly possible.”

- Species Extinction: A misaligned ASI might pursue goals that, while not explicitly hostile, result in human extinction as a side effect. The concern isn’t necessarily that AI would turn “evil” like in movies, but rather that superintelligence operating with goals misaligned from human values could be catastrophic.

Between these extremes lie scenarios like human-AI coexistence, where technologies such as brain-computer interfaces might allow humans to augment our own intelligence and participate in the cognitive revolution.

The key insight is that small differences in how we develop and implement AI could lead to radically different outcomes—making our current decisions profoundly consequential.

Why This Matters Today

You might wonder why technologies that could be decades away should concern us now. Urban provides several compelling answers:

- Lead time requirements: The technical, ethical, and governance challenges of aligning ASI may require decades of careful work.

- Irreversibility: Unlike other technologies, superintelligence could permanently alter the trajectory of civilization with no opportunity for course correction.

- Stakes: The difference between success and failure could literally determine humanity’s future existence.

- Expert timelines: When surveyed, AI researchers estimated a 50% chance of achieving AGI by 2040-2050, and a 90% chance by 2075, with some leading experts believing it could happen much sooner.

- Trajectory is being set now: Decisions being made today about AI research, investment, and regulation will shape the path we take toward superintelligence.

As Urban argues, we are currently standing at what could be the most important fork in the road of human history. This may be the most crucial conversation humanity needs to have, yet it remains largely confined to specialized AI research labs and philosophy departments.

Moving Forward Wisely

What strikes me most about Urban’s analysis is how it balances technological optimism with clear-eyed assessment of risks. His work stands as a profound invitation to engage thoughtfully with perhaps the most consequential technology humanity will ever develop.

The emergence of superintelligence presents humanity with what Urban calls a “double-edged sword”—perhaps the most consequential one we’ve ever faced. How do we harness the tremendous potential of advanced AI while ensuring it remains beneficial to humanity?

There are no easy answers, but several principles seem important:

- Take the challenge seriously and dedicate substantial resources to AI safety research

- Develop broad consensus on what values and goals we want AI systems to adopt

- Proceed cautiously, especially as we approach AGI capabilities

- Ensure the development process is transparent and includes diverse perspectives

My Reflection

The questions Urban raises remain as relevant today as when he wrote these pieces in 2015—perhaps even more so, as we witness the accelerating capabilities of systems like GPT-4, Claude, DeepSeek, and other frontier AI models.

For those seeking to understand not just what AI can do today, but what it might become tomorrow, I cannot recommend Tim Urban’s original articles strongly enough. His unique blend of depth, clarity, and humor makes complex concepts accessible without sacrificing nuance.

Explore Further

I encourage you to experience Tim Urban’s full exploration of this topic in his original two-part series on Wait But Why.

His comprehensive treatment of the subject rewards careful reading and remains, in my estimation, one of the finest introductions to the profound implications of advanced artificial intelligence.

What are your thoughts on the path to superintelligent AI? I’d love to hear your perspectives in the comments below.

Rajiv Pant is President at Flatiron Software and Snapshot AI, where he leads organizational growth and AI innovation while serving as a trusted advisor to enterprise clients on their AI transformation journeys. With a background spanning CTO roles at The Wall Street Journal, The New York Times, and other major media organizations, he brings deep expertise in language AI technology leadership and digital transformation. He writes about artificial intelligence, leadership, and the intersection of technology and humanity.